Viewpoint Simulation for Coverage Planning

Overview

The viewpoint_planning package provides a ROS-based framework to spawn a sensor in a Gazebo simulation environment and capture depth and color images from various poses. This package includes the URDF model of the sensor, necessary plugins for depth and color image capturing, and a ROS service to manage the sensor's pose and data capture.

This package is meant as a wrapper for simulating the results of algorithms designed for solving the Coverage Viewpoint Problem.

Features

- URDF Model: Detailed URDF model for the structured light sensor.

- Gazebo Integration: Simulate the sensor in a Gazebo environment with depth and color image capturing capabilities.

- ROS Services: Spawn the sensor at specified poses and capture sensor data.

- TF Management: Manage TF frames for accurate pose representation and data alignment.

Installation

Prerequisites

- ROS (Robot Operating System) Noetic

- Gazebo

- Necessary ROS dependencies

Clone the Repository

cd ~/catkin_ws/src

git clone https://github.com/FarStryke21/viewpoint_planning.git

Build the package

cd ~/catkin_ws

catkin build

source devel/setup.bash

Usage

Launch Simulator

roslaunch viewpoint_planning viewpoint_test.launch

Spawn the target model

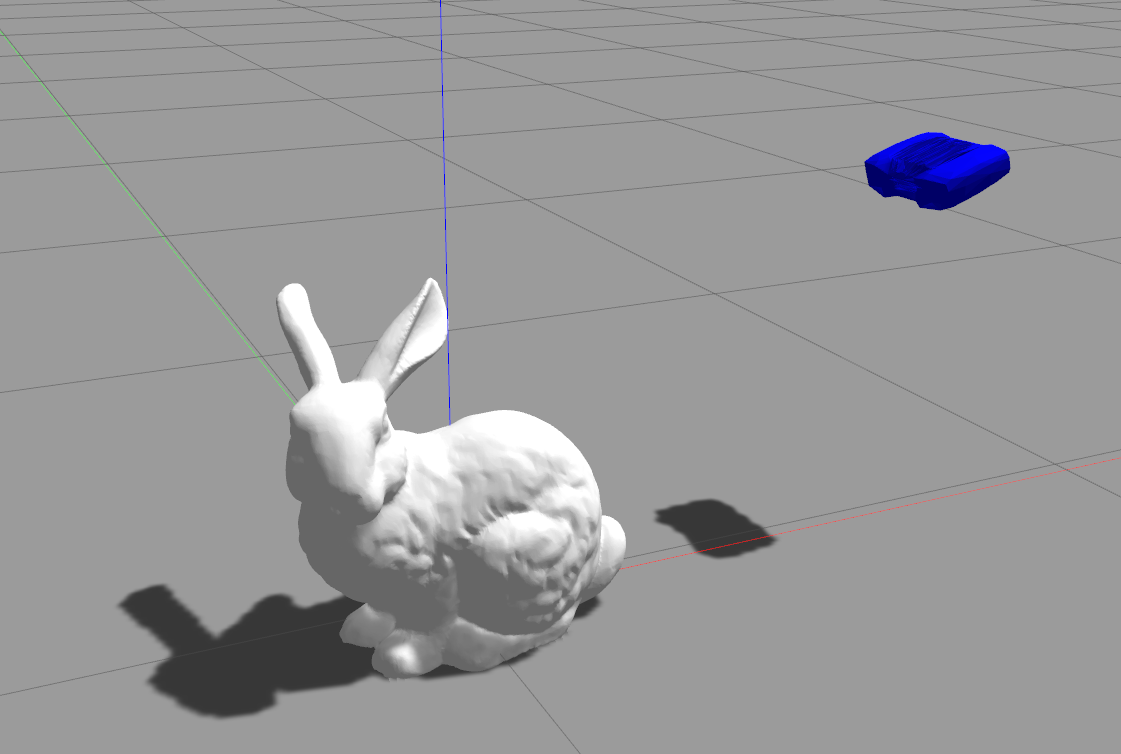

Define the Gazebo model for your target object in the gazebo_models directory, then load it by name through the /load_mesh service:

rosservice call /load_mesh "mesh_file: 'test_bunny'"

Set initial pose

The pose of the sensor can be changed by sending requests to the /gazebo/set_model_state service. Your viewpoint manager should send these service requests. You can also send them from a command-line terminal or from the Gazebo window.
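The orientation in a /gazebo/set_model_state request is a quaternion, while viewpoint planners often reason in roll, pitch, and yaw. The following is a minimal sketch of that conversion in plain Python, assuming the common fixed-axis roll-pitch-yaw convention; the helper name quaternion_from_euler is chosen here for illustration and is not part of this package. Whether a given rotation makes the sensor face the target depends on the sensor's frame as defined in the URDF, so treat the angles as something to verify in simulation.

```python
import math


def quaternion_from_euler(roll, pitch, yaw):
    """Convert roll/pitch/yaw in radians to a quaternion (x, y, z, w).

    Assumes rotations about the fixed x, y, z axes applied in that order,
    which is an assumption about the planner's angle convention.
    """
    cr, sr = math.cos(roll / 2.0), math.sin(roll / 2.0)
    cp, sp = math.cos(pitch / 2.0), math.sin(pitch / 2.0)
    cy, sy = math.cos(yaw / 2.0), math.sin(yaw / 2.0)

    x = sr * cp * cy - cr * sp * sy
    y = cr * sp * cy + sr * cp * sy
    z = cr * cp * sy - sr * sp * cy
    w = cr * cp * cy + sr * sp * sy
    return (x, y, z, w)


if __name__ == "__main__":
    # A half turn (pi radians) about the y axis.
    q = quaternion_from_euler(0.0, math.pi, 0.0)
    print("x={:.3f} y={:.3f} z={:.3f} w={:.3f}".format(*q))
```

A half turn about the y axis comes out as approximately (x: 0, y: 1, z: 0, w: 0) up to floating-point rounding. On a machine with ROS installed, tf.transformations.quaternion_from_euler is an alternative to hand-written math.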

An example of a pose request for the sensor looking directly down is given here:

rosservice call /gazebo/set_model_state "model_state: { model_name: 'structured_light_sensor_robot', pose: { position: { x: 0, y: 0, z: 1.0 }, orientation: { x: 0.0, y: 1.0, z: 0.0, w: 0.0 } }, twist: { linear: { x: 0.0, y: 0.0, z: 0.0 }, angular: { x: 0.0, y: 0.0, z: 0.0 } }, reference_frame: 'world' }"

Capture the surface

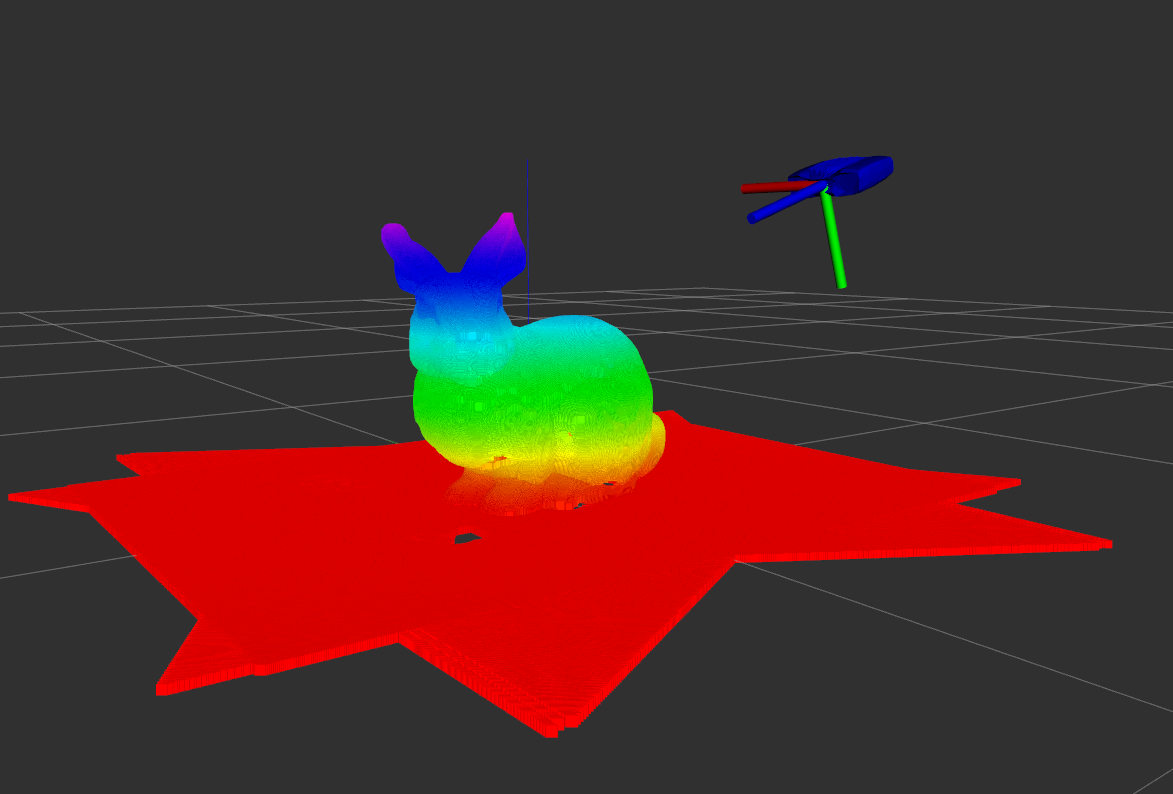

Surface capture requests can be made through the following rosservice:

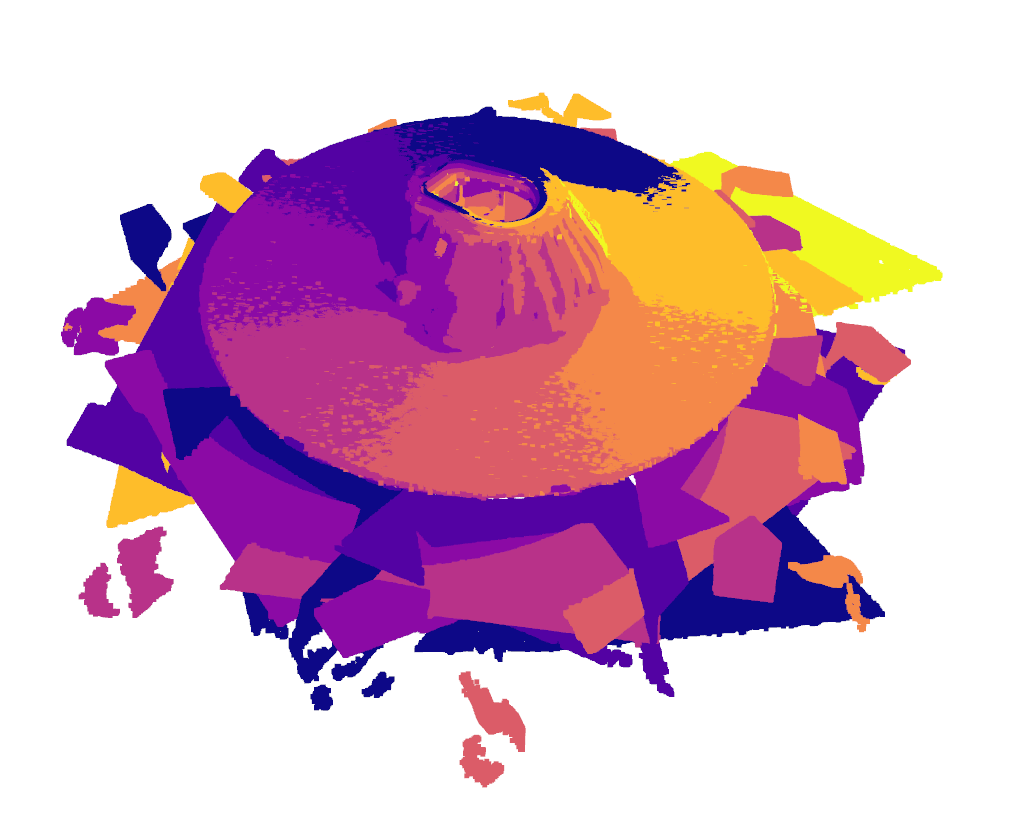

rosservice call /capture_surface

This publishes two point clouds: /current_measurement, which provides the last captured surface, and /accumulated_surface, which provides the combined surface clouds. The messages are only published during service calls.
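A viewpoint manager typically repeats the same two steps for every candidate viewpoint: move the sensor, then capture. The sketch below shows one way to script that loop by shelling out to the same rosservice commands used above, which avoids assuming the service type of /capture_surface. The function names, the dry_run flag, and the second viewpoint position are illustrative assumptions, not part of this package.

```python
import subprocess

# Model name taken from the set_model_state example in this README.
SENSOR_MODEL = "structured_light_sensor_robot"


def set_model_state_cmd(position, orientation, model_name=SENSOR_MODEL, frame="world"):
    """Build the rosservice command that moves the sensor to one pose."""
    px, py, pz = position
    qx, qy, qz, qw = orientation
    request = (
        "model_state: {{ model_name: '{name}', "
        "pose: {{ position: {{ x: {px}, y: {py}, z: {pz} }}, "
        "orientation: {{ x: {qx}, y: {qy}, z: {qz}, w: {qw} }} }}, "
        "twist: {{ linear: {{ x: 0.0, y: 0.0, z: 0.0 }}, "
        "angular: {{ x: 0.0, y: 0.0, z: 0.0 }} }}, "
        "reference_frame: '{frame}' }}"
    ).format(name=model_name, px=px, py=py, pz=pz,
             qx=qx, qy=qy, qz=qz, qw=qw, frame=frame)
    return ["rosservice", "call", "/gazebo/set_model_state", request]


def capture_cmd():
    """Build the rosservice command that triggers one surface capture."""
    return ["rosservice", "call", "/capture_surface"]


def run_viewpoints(viewpoints, dry_run=True):
    """Move to each (position, quaternion) viewpoint and capture once.

    With dry_run=True the commands are only collected and returned, so the
    sequence can be inspected without a running simulator.
    """
    commands = []
    for position, orientation in viewpoints:
        commands.append(set_model_state_cmd(position, orientation))
        commands.append(capture_cmd())
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # raises if a service call fails
    return commands


if __name__ == "__main__":
    # Illustrative viewpoints: (x, y, z) in metres and (qx, qy, qz, qw).
    plan = [
        ((0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 0.0)),
        ((0.5, 0.0, 1.0), (0.0, 1.0, 0.0, 0.0)),
    ]
    for cmd in run_viewpoints(plan, dry_run=True):
        print(" ".join(cmd[:3]))
```

Because the point clouds are only published during service calls, any node that consumes /current_measurement or /accumulated_surface should probably be subscribed before run_viewpoints is invoked with dry_run=False.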

Files and Directories

- urdf/: Contains the URDF model files.

- launch/: Contains launch files for starting the Gazebo simulation and RViz.

- meshes/: Contains the mesh files for the sensor model.

- gazebo_models/: Contains the Gazebo model descriptions for the target objects.

- config/: Contains configuration files for RViz and Gazebo.

- src/: Contains the source code for the ROS package.

- srv/: Contains the service descriptions for the ROS package.

- CMakeLists.txt: CMake build script.

- package.xml: Package manifest.

Contact

For issues, questions, or contributions, please contact:

Author: Aman Chulawala

GitHub: FarStryke21

Contributions and feedback are always welcome!