SAC Policy for Autonomous Vehicles

Problem Statement

Perception system is of crucial importance in autonomous driving, although the prosperity of deep learning has brought huge success in different tasks like image classification, semantic segmentation, and object detection, these deep neural networks are often vulnerable against adversarial attack. How to build a robust perception model under different conditions (e.g. different orientations, different illumination conditions, or even different attacks) is still an open questions in the frontier for autonomous driving researchers.

The objective of this project was divided into two parts. Part A required acting as an agent of chaos and launching adversarial attacks onto an incoming vehicle with the intention of portraying incorrect information about the environment. Part B required training an agent using the Soft Actor Critic method to drive with confidence in adverse environment.

Part A

An adversarial attack on an agent refers to the intentional perturbation or manipulation of the agent’s environment or its observations in a way that leads to suboptimal or undesired behaviors. These attacks exploit vulnerabilities in the agent’s learning and decision-making processes to degrade its performance, potentially causing it to make poor decisions or fail to achieve its objectives.

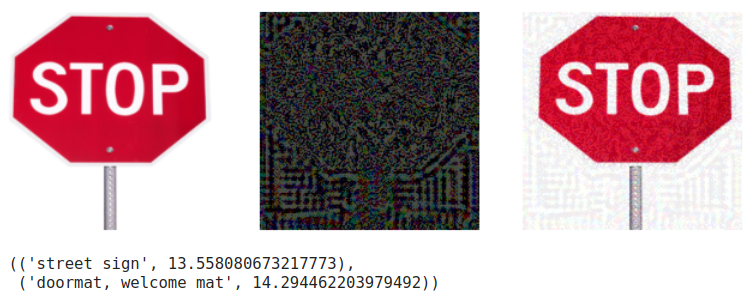

Our choice of an adversarial attack was on the stop sign that an agent would see on the road. Incorrect interpretation of these signs can be very dangerous in a real scenario. We were able to devise several successful adversarial patches using traditional occluding and the scratchai package. we implemented several adversarial vectors like Random Perturbation, Fast Gradient, Project Gradient Descent and more.

Part B

Soft Actor-Critic (SAC) is an advanced reinforcement learning algorithm that combines aspects of both value-based and policy-based methods. It’s part of the family of actor-critic methods, where the actor refers to the policy function and the critic refers to the value function.

For driving, SAC is preferable because it can handle complex, nonlinear dynamics and adapt to varying conditions in real-time, optimizing for long-term safety and efficiency. PID controllers, while simple and effective for linear, well-understood systems, lack the adaptability and optimality needed for dynamic, high-dimensional driving environments.

The second part of the project focussed on developing a good SAC agent for autonomous driving, and demonstrating how it performed better than traditional PID controllers.

For this portion, we made use of the CARLA simulator to create adverse environments for driving a car. This included scenarios like unexpected pedestrians jaywalking, occluded pedestrians, randomly stopping lead car, emergency braking, taking turns while navigation traffic, and much more. The agents performed reasonably well given the training data, but there is a definite scope for improvement in the performance. Nevertheless, we demonstrated the limitation of PID controllers when encountering non linearity and established the supremacy of Reinforcement Learning for driving vehicles.