Pose Estimation: Understanding what is where?

Pose estimation refers to the process of determining the position and orientation of objects in a given scene. It involves calculating the spatial relationship between the camera and the object, often represented as a 6-DoF (six degrees of freedom) pose consisting of three translational and three rotational parameters.
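To make the 6-DoF representation concrete, the sketch below packs three translational and three rotational parameters into a single 4x4 homogeneous transform. The rotation-vector (axis times angle) parameterization and the function name `pose_to_matrix` are illustrative assumptions, not something the pipeline described here prescribes.

```python
import numpy as np

def pose_to_matrix(translation, rotvec):
    """Build a 4x4 homogeneous transform from a translation (x, y, z)
    and a rotation vector (unit axis scaled by the angle in radians)."""
    rotvec = np.asarray(rotvec, dtype=float)
    angle = np.linalg.norm(rotvec)
    if angle < 1e-12:
        R = np.eye(3)
    else:
        k = rotvec / angle
        # Skew-symmetric cross-product matrix of the rotation axis.
        K = np.array([[0.0, -k[2], k[1]],
                      [k[2], 0.0, -k[0]],
                      [-k[1], k[0], 0.0]])
        # Rodrigues' rotation formula.
        R = np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = translation
    return T

# Hypothetical pose: object rotated 90 degrees about z, offset from the camera.
T = pose_to_matrix([0.1, 0.0, 0.5], [0.0, 0.0, np.pi / 2])
point_in_object = np.array([1.0, 0.0, 0.0, 1.0])   # homogeneous coordinates
point_in_camera = T @ point_in_object
```

With this convention, a point expressed in the object frame is mapped into the camera frame by a single matrix product, and poses can be chained by multiplying their matrices.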

Why do we need to know where the objects are? The answer is simple: to interact with them. In the context of robotics, pose estimation is a fundamental task that enables robots to perceive and understand the environment, which is essential for various applications such as object manipulation, navigation, and human-robot interaction.

Much work has been done in the field of pose estimation, and various methods have been proposed to solve this problem. These methods can be broadly categorized into two types: model-based and data-driven approaches. Model-based methods rely on a 3D model of the object to estimate its pose, while data-driven methods use machine learning techniques to learn the pose directly from the data.

Getting some context

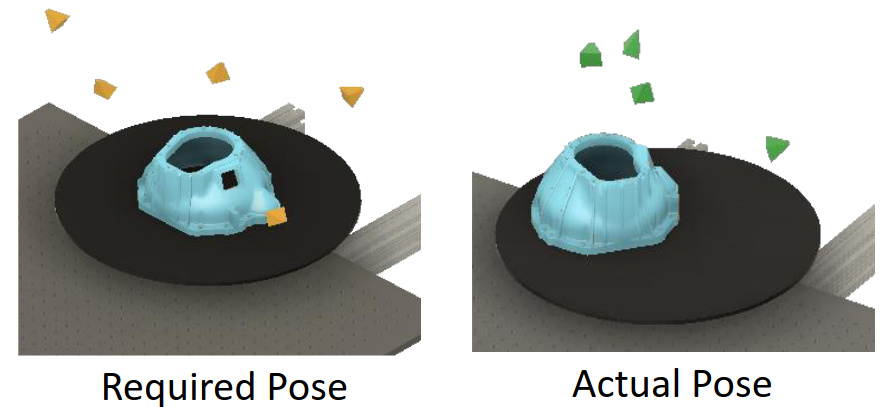

Consider the metrology pipeline as an example. We capture the surface of an object with a 3D scanner. To do so, we compute the set of viewpoints at which the sensor must be placed to cover the entire surface. These viewpoints, however, are computed for an assumed pose of the object. If the object is not in that pose, the planned viewpoints will not cover the entire surface. This is where pose estimation comes into play: we estimate the actual pose of the object and then correct the set of viewpoints so that the entire surface is captured.
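The viewpoint correction can be sketched as follows, assuming that viewpoints and object poses are all expressed as 4x4 homogeneous transforms in a common world frame. The function name `correct_viewpoints` and the frame conventions are assumptions made for illustration.

```python
import numpy as np

def correct_viewpoints(viewpoints, T_nominal, T_estimated):
    """Move planned sensor viewpoints so that each one keeps the same pose
    relative to the object after the object is found at T_estimated
    instead of the assumed T_nominal (all 4x4 transforms, world frame)."""
    # Rigid motion that carries the nominal object pose onto the estimated one.
    T_delta = T_estimated @ np.linalg.inv(T_nominal)
    return [T_delta @ V for V in viewpoints]

# Hypothetical example: the object sits 0.2 m further along x than planned.
T_nominal = np.eye(4)
T_estimated = np.eye(4)
T_estimated[:3, 3] = [0.2, 0.0, 0.0]

viewpoint = np.eye(4)
viewpoint[:3, 3] = [0.0, 0.0, 1.0]   # sensor planned 1 m above the object

corrected = correct_viewpoints([viewpoint], T_nominal, T_estimated)
```

Applying the same rigid motion to every viewpoint preserves the sensor-to-object geometry that the original plan relied on.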

Our area of interest is the development of model-based methods for pose estimation. We are currently working on a project that estimates the pose of objects from a point cloud. We use a structured light sensor to capture the surface and want to estimate the pose without changing the sensor.

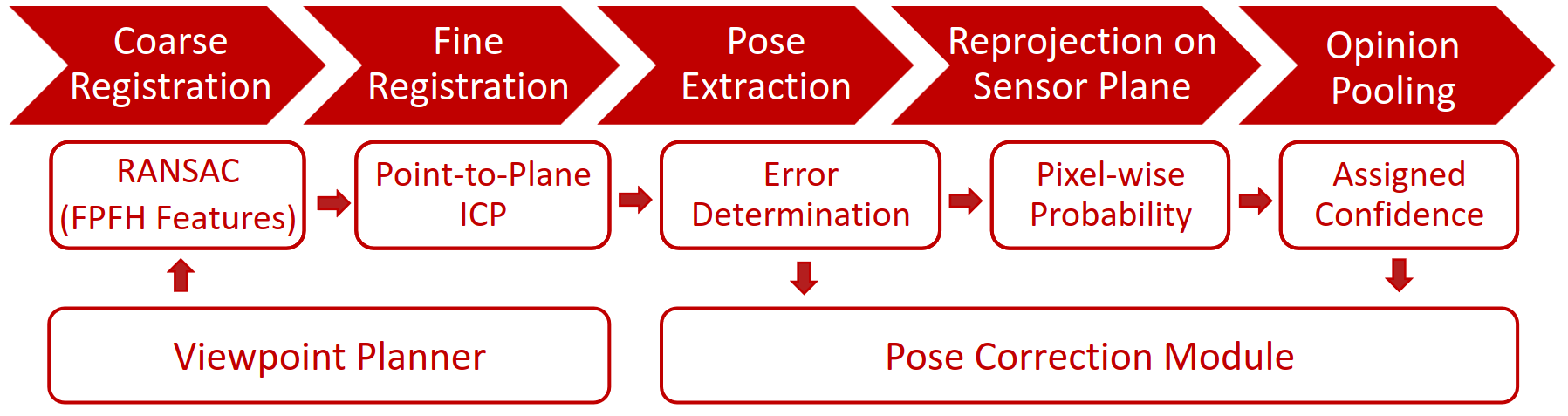

Global Registration

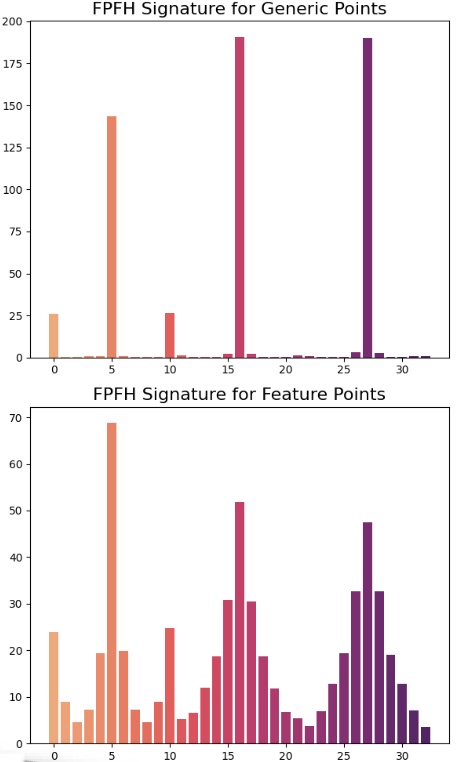

Fast Point Feature Histograms (FPFH) is a feature descriptor that describes the local geometric properties of a point in a point cloud. It is an extension of the Point Feature Histograms (PFH) descriptor, which captures the relationship between a point and its neighbors by computing a histogram of angular features over all pairs of points in the neighborhood. FPFH improves on the efficiency of PFH in two steps: it first computes a Simplified PFH (SPFH) for each point, using only the pairs formed by the point and its direct neighbors, and then combines each point's SPFH with a distance-weighted sum of the SPFHs of its neighbors. For a cloud of n points with k neighbors each, this reduces the complexity from O(nk²) to O(nk).
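As a rough illustration of what goes into these histograms, the sketch below computes the three angular features for one point pair and applies the FPFH weighting to precomputed SPFH histograms. It is a NumPy-only sketch, not the Open3D or PCL implementation; the function names are hypothetical, normals are assumed to be unit length, and the source normal is assumed not to be parallel to the line joining the two points.

```python
import numpy as np

def pair_features(p_s, n_s, p_t, n_t):
    """Angular features (alpha, phi, theta) of a point pair, measured in the
    Darboux frame (u, v, w) attached to the source point."""
    d_unit = (p_t - p_s) / np.linalg.norm(p_t - p_s)
    u = n_s
    v = np.cross(u, d_unit)
    v = v / np.linalg.norm(v)          # undefined if u is parallel to d_unit
    w = np.cross(u, v)
    alpha = np.dot(v, n_t)
    phi = np.dot(u, d_unit)
    theta = np.arctan2(np.dot(w, n_t), np.dot(u, n_t))
    return alpha, phi, theta

def fpfh_from_spfh(spfh, i, neighbors, distances):
    """FPFH of point i: its own SPFH plus the average of its neighbors'
    SPFHs, each weighted by the inverse of its distance to point i."""
    neighbors = np.asarray(neighbors)
    distances = np.asarray(distances, dtype=float)
    weighted = (spfh[neighbors] / distances[:, None]).sum(axis=0) / len(neighbors)
    return spfh[i] + weighted

# Two points on a flat surface with identical normals: all three angles vanish.
features = pair_features(np.array([0.0, 0.0, 0.0]), np.array([0.0, 0.0, 1.0]),
                         np.array([1.0, 0.0, 0.0]), np.array([0.0, 0.0, 1.0]))

# Toy two-bin SPFH histograms for three points (values made up).
toy_spfh = np.array([[1.0, 0.0], [0.0, 2.0], [2.0, 0.0]])
toy_fpfh = fpfh_from_spfh(toy_spfh, 0, neighbors=[1, 2], distances=[1.0, 2.0])
```

In a full descriptor, the three features of every pair are binned into histograms; the weighting step is what lets FPFH reuse neighboring SPFHs instead of revisiting every pair in the neighborhood.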

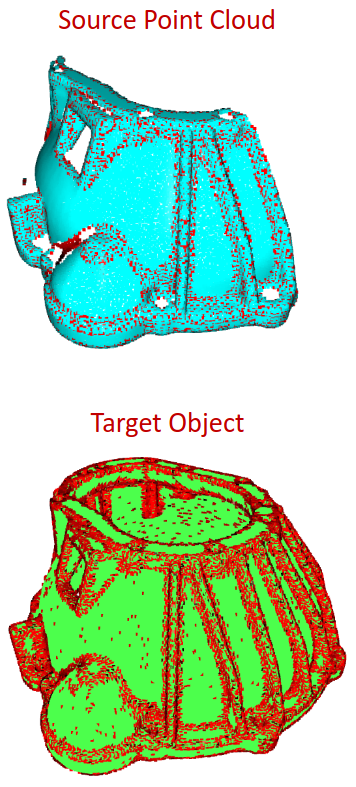

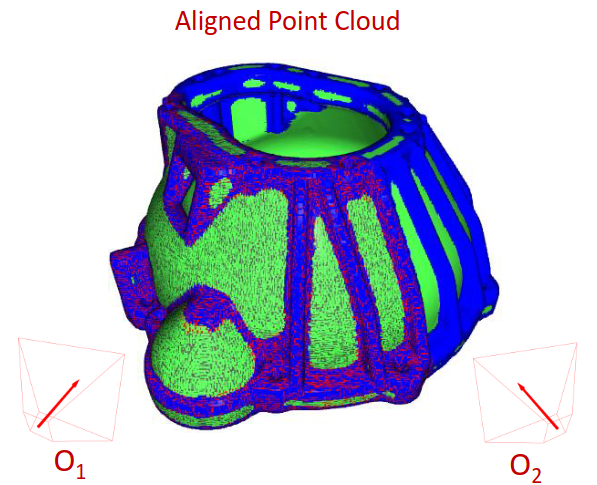

We leverage the FPFH descriptor to estimate the pose of objects in a point cloud. We first extract the FPFH features from the point cloud and then match them against the features of the object model to estimate a rough pose. This rough global registration runs on a down-sampled point cloud and uses Random Sample Consensus (RANSAC) to find the transform that agrees with the largest number of feature matches.
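The idea behind this stage can be sketched in plain NumPy, assuming that features are matched by brute-force nearest neighbor in descriptor space and that each RANSAC hypothesis is fitted to three matches with the Kabsch (SVD) method. The function names, the random stand-in descriptors, and the threshold are all illustrative assumptions; the actual pipeline relies on Open3D for this step.

```python
import numpy as np

def kabsch(src, dst):
    """Least-squares rigid transform (R, t) such that R @ src_i + t ~ dst_i."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection in the SVD solution.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c

def ransac_registration(src, dst, src_feat, dst_feat, threshold,
                        iterations=500, seed=0):
    """Rough alignment of src onto dst from feature matches, via RANSAC."""
    rng = np.random.default_rng(seed)
    # Putative correspondences: nearest descriptor in dst for each src point.
    feat_dist = np.linalg.norm(src_feat[:, None, :] - dst_feat[None, :, :], axis=2)
    match = feat_dist.argmin(axis=1)
    best_R, best_t, best_inliers = np.eye(3), np.zeros(3), -1
    for _ in range(iterations):
        idx = rng.choice(len(src), size=3, replace=False)
        R, t = kabsch(src[idx], dst[match[idx]])
        residuals = np.linalg.norm(src @ R.T + t - dst[match], axis=1)
        inliers = int((residuals < threshold).sum())
        if inliers > best_inliers:
            best_R, best_t, best_inliers = R, t, inliers
    return best_R, best_t, best_inliers

# Synthetic demo (all values made up for illustration).
rng = np.random.default_rng(1)
src = rng.uniform(-1.0, 1.0, size=(30, 3))
src_feat = rng.normal(size=(30, 8))            # stand-in for FPFH descriptors
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -0.2, 0.1])
dst = src @ R_true.T + t_true
dst_feat = src_feat.copy()
dst_feat[[0, 1, 2, 3, 4]] = src_feat[[1, 2, 3, 4, 0]]   # five wrong matches
R_est, t_est, n_inliers = ransac_registration(src, dst, src_feat, dst_feat,
                                              threshold=0.01)
```

Because every hypothesis is scored against all matches, a handful of wrong feature matches does not prevent the correct transform from collecting the most inliers.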

Fine Registration

The output of the global registration provides a warm start for the fine registration algorithm. We use the point-to-plane Iterative Closest Point (ICP) algorithm to refine the pose estimate. ICP iteratively aligns the point cloud with the object model: at each iteration it pairs every point with its nearest counterpart in the other cloud under the current transform, then updates the transform to minimize the distance between the paired points. In the point-to-plane variant this distance is measured along the surface normal at the target point. The FPFH features are used in the global stage, to produce the initial transform from which ICP starts.
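A minimal NumPy sketch of point-to-plane ICP is given below, assuming brute-force nearest-neighbor correspondences and the usual small-angle linearization of the rotation at each step. The function names and the synthetic box-corner data are illustrative assumptions; this is not the Open3D routine the pipeline uses.

```python
import numpy as np

def rotvec_to_matrix(w):
    """Rotation matrix from a rotation vector (Rodrigues' formula)."""
    angle = np.linalg.norm(w)
    if angle < 1e-12:
        return np.eye(3)
    k = w / angle
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def point_to_plane_step(src, dst, dst_normals):
    """One linearized update minimizing sum(((R p + t - q) . n)^2)."""
    A = np.hstack([np.cross(src, dst_normals), dst_normals])   # (N, 6)
    b = np.einsum("ij,ij->i", dst - src, dst_normals)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)                   # [omega, t]
    T = np.eye(4)
    T[:3, :3] = rotvec_to_matrix(x[:3])
    T[:3, 3] = x[3:]
    return T

def icp_point_to_plane(src, dst, dst_normals, T_init, iterations=20):
    """Refine T_init so that src, once transformed, lies on the dst surface."""
    T = T_init.copy()
    for _ in range(iterations):
        moved = src @ T[:3, :3].T + T[:3, 3]
        # Brute-force nearest neighbor; a k-d tree would be used in practice.
        nn = np.linalg.norm(moved[:, None, :] - dst[None, :, :], axis=2).argmin(axis=1)
        T = point_to_plane_step(moved, dst[nn], dst_normals[nn]) @ T
    return T

# Synthetic model: grids on the three faces that meet at a box corner.
g = np.linspace(0.1, 1.0, 10)
a, b2 = [m.ravel() for m in np.meshgrid(g, g)]
zero = np.zeros_like(a)
dst = np.vstack([np.column_stack([a, b2, zero]),      # face z = 0
                 np.column_stack([zero, a, b2]),      # face x = 0
                 np.column_stack([a, zero, b2])])     # face y = 0
dst_normals = np.vstack([np.tile([0.0, 0.0, 1.0], (100, 1)),
                         np.tile([1.0, 0.0, 0.0], (100, 1)),
                         np.tile([0.0, 1.0, 0.0], (100, 1))])

# Small made-up misalignment, standing in for the residual error left by
# the global registration.
R_true = rotvec_to_matrix(np.array([0.01, -0.015, 0.02]))
t_true = np.array([0.005, -0.01, 0.0075])
src = (dst - t_true) @ R_true            # so that R_true @ src_i + t_true = dst_i
T_est = icp_point_to_plane(src, dst, dst_normals, np.eye(4))
```

The sketch starts from the identity because the synthetic misalignment is already small; in the pipeline, `T_init` would be the transform returned by the global registration.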

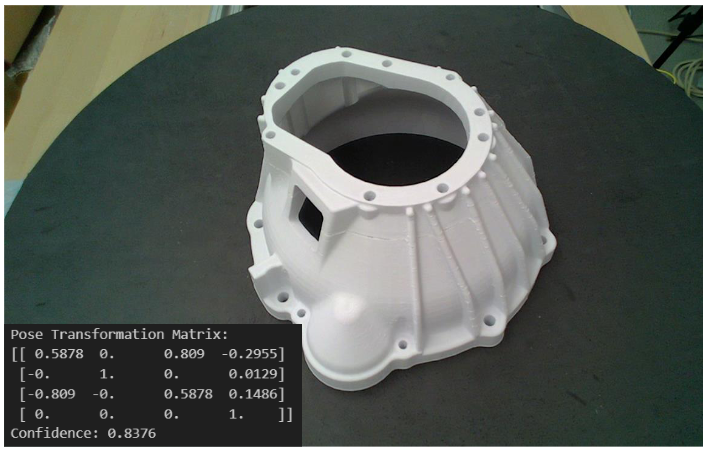

The output of the fine registration is a rigid transform between the scan and the object model, from which the pose of the object is obtained.

Implementation

The current version of the pose estimation pipeline is implemented in Python using the Open3D library, along with our in-house ROS bindings for point cloud data. Capturing the rough scan for the pose module takes 3.3 seconds on average, and the pose estimation engine then returns a result in under 4 seconds. The entire pipeline provides a pose estimate in under 8 seconds and is currently available as a ROS node for use in ROS-based systems.

Future work includes improving performance by leveraging the Point Cloud Library (PCL) for feature extraction and alignment. Feature extraction can be computed in parallel across points, which makes it an ideal candidate for GPU processing. The final objective is a pose estimation rate of 1 Hz or more, to allow live object tracking during the scanning process.