Building a Diffusion Model

Introduction to Diffusion Models

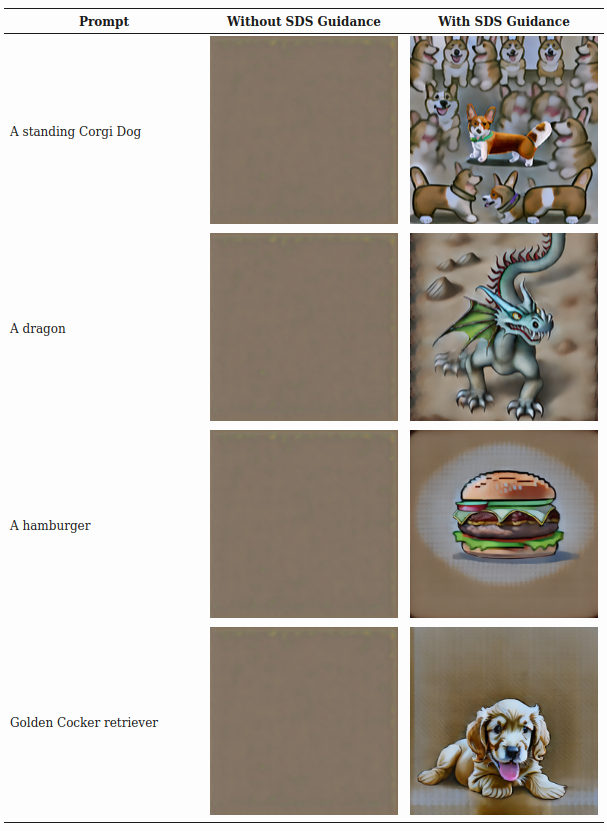

Diffusion models are a class of generative models that have gained significant attention in recent years, including in 3D vision. They learn to generate data that resembles their training data, which makes them useful for tasks such as 3D reconstruction, object generation, and scene understanding.

Key Concepts

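- Latent Variable Model: A diffusion model is a latent variable model that maps data to the latent space using a fixed (not learned) Markov chain.

- Forward Process: Gradually adds noise, typically Gaussian, to the data over many steps until almost no signal remains.

- Reverse Process: A learned model removes the noise step by step, transforming pure noise back into a sample from the target distribution.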
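To make the forward and reverse processes concrete, here is a minimal sketch in plain Python. It assumes a DDPM-style Gaussian diffusion with a linear noise schedule, neither of which is specified above; the function names and the schedule endpoints (`1e-4` to `0.02` over 1000 steps) are illustrative choices. In a real model, the noise passed to `reverse_mean` would come from a trained network rather than from the true noise used here.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_t for each step (a linear schedule is an assumption)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bar, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bar.append(running)
    return alpha_bar

def forward_noise(x0, t, alpha_bar, rng):
    """Sample x_t from q(x_t | x_0) in closed form; also return the noise used."""
    signal = math.sqrt(alpha_bar[t])
    sigma = math.sqrt(1.0 - alpha_bar[t])
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [signal * x + sigma * e for x, e in zip(x0, eps)]
    return x_t, eps

def reverse_mean(x_t, t, eps_pred, betas, alpha_bar):
    """Mean of the reverse step x_t -> x_{t-1}, given a noise prediction."""
    coef = betas[t] / math.sqrt(1.0 - alpha_bar[t])
    scale = 1.0 / math.sqrt(1.0 - betas[t])
    return [scale * (x - coef * e) for x, e in zip(x_t, eps_pred)]

rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bar = cumulative_alphas(betas)

# Toy data: flattened point coordinates in [-1, 1] (hypothetical input).
x0 = [rng.uniform(-1.0, 1.0) for _ in range(3000)]
x_first, eps_first = forward_noise(x0, 0, alpha_bar, rng)  # barely noised
x_last, _ = forward_noise(x0, 999, alpha_bar, rng)         # almost pure noise

# With the true noise standing in for a network prediction,
# the first noising step is undone exactly.
x0_recovered = reverse_mean(x_first, 0, eps_first, betas, alpha_bar)
```

Because `forward_noise` has a closed form, training can jump directly to any step `t` without simulating the whole chain.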

Advantages of Diffusion Models in 3D Vision

- State-of-the-art image quality

- No need for adversarial training

- Scalability and parallelizability

- Ability to generate smooth, continuous surfaces

- Better handling of complex, high-dimensional data

Applications in 3D Vision

3D Object Generation

Diffusion models can be used to generate 3D objects with high fidelity and diversity. For example, Viewset Diffusion uses multi-view 2D data to train a diffusion model for generating 3D objects.

3D Reconstruction

RealFusion uses the prior captured in a pretrained 2D image diffusion model to reconstruct a full 360° 3D model of an object from a single image.

View Synthesis

Diffusion models can generate novel views of 3D scenes, enabling applications in virtual and augmented reality.

Scene Understanding

By learning to denoise 3D data, diffusion models can contribute to better scene understanding and object recognition in 3D environments.

Recent Advancements

RenderDiffusion

RenderDiffusion is described by its authors as the first diffusion model for 3D generation and inference that can be trained using only monocular 2D supervision. It introduces an image denoising architecture that generates and renders an intermediate 3D representation of the scene in each denoising step.

Denoising Diffusion via Image-Based Rendering

This approach introduces a new neural scene representation called IB-planes, which can efficiently and accurately represent large 3D scenes. It proposes a denoising-diffusion framework to learn a prior over this 3D scene representation, using only 2D images without the need for additional supervision signals.

GSD: View-Guided Gaussian Splatting Diffusion

GSD is a diffusion-based approach to 3D object reconstruction from a single view, built on the Gaussian Splatting (GS) representation. It uses an unconditional diffusion model to generate 3D objects represented as sets of GS ellipsoids, achieving high-quality 3D structure and texture.
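As an illustration of what a "GS ellipsoid" is, the sketch below uses the standard 3D Gaussian Splatting parameterization: each Gaussian has a center, per-axis scales, a rotation quaternion, an opacity, and a color, and its covariance is built as Σ = R S Sᵀ Rᵀ. The `Gaussian3D` class and helper names are hypothetical, and whether GSD uses exactly this set of attributes is an assumption.

```python
import math
from dataclasses import dataclass

@dataclass
class Gaussian3D:
    center: tuple    # (x, y, z) mean of the ellipsoid
    scale: tuple     # per-axis standard deviations (sx, sy, sz)
    rotation: tuple  # quaternion (w, x, y, z), normalized on use
    opacity: float   # blending weight in [0, 1]
    color: tuple     # RGB in [0, 1]

def rotation_matrix(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def covariance(g):
    """Covariance Sigma = R S S^T R^T, with S = diag(scale)."""
    R = rotation_matrix(g.rotation)
    var = [s * s for s in g.scale]
    return [
        [sum(R[i][k] * var[k] * R[j][k] for k in range(3)) for j in range(3)]
        for i in range(3)
    ]

# An axis-aligned ellipsoid, and the same one rotated 90 degrees about z.
axis_aligned = Gaussian3D((0.0, 0.0, 0.0), (1.0, 2.0, 3.0),
                          (1.0, 0.0, 0.0, 0.0), 1.0, (1.0, 0.0, 0.0))
half = math.pi / 4
rotated = Gaussian3D((0.0, 0.0, 0.0), (1.0, 2.0, 3.0),
                     (math.cos(half), 0.0, 0.0, math.sin(half)),
                     1.0, (1.0, 0.0, 0.0))

cov_aligned = covariance(axis_aligned)
cov_rotated = covariance(rotated)
```

Storing scale and rotation separately, rather than a raw 3x3 matrix, keeps the covariance symmetric and positive semi-definite by construction. A diffusion model over such objects would then operate on a set of these parameter vectors.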

| Prompt | Depth Map | RGB Visuals |
|---|---|---|
| A standing Corgi Dog | | |
| Castle on a hill | | |
| Race Car | | |

Challenges and Future Directions

- Improving computational efficiency for large-scale 3D scenes

- Enhancing multimodal fusion for better 3D understanding

- Exploring large-scale pretraining for improved generalization across 3D tasks

- Addressing the challenges of handling occlusions and varying point densities in 3D data

Conclusion

Diffusion models have shown great promise in advancing the field of 3D vision. By leveraging their ability to model complex data distributions and generate high-quality 3D content, researchers are pushing the boundaries of what's possible in 3D reconstruction, generation, and understanding. As the field continues to evolve, we can expect to see even more innovative applications of diffusion models in 3D vision tasks.

References

- Anciukevicius, T., et al. (2024). RenderDiffusion: Image Diffusion for 3D Reconstruction, Inpainting and Generation. arXiv preprint.

- Anciukevicius, T., et al. (2024). Denoising Diffusion via Image-Based Rendering. arXiv preprint arXiv:2402.03445.

- Diffusion Models in 3D Vision: A Survey. (2024). arXiv preprint.

- Introduction to Diffusion Models for Machine Learning. (2022). AssemblyAI Blog.

- Mu, Y., et al. (2024). GSD: View-Guided Gaussian Splatting Diffusion for 3D Reconstruction. arXiv preprint arXiv:2407.04237.